Decoding the Temporal Enigma: What Is a Temporal Model in AI?

Unraveling the Time Dimension in Artificial Intelligence

Ever get the feeling that artificial intelligence sometimes misses the bigger picture, as if it sees a single frame but not the movie playing out? That’s where temporal models enter the scene. Think of them as AI’s way of grasping that events unfold in a specific order, that what happened before influences what happens now, and that data has a flow to it. Without this understanding of time, AI would be stuck in a perpetual present, unable to use the rich context that sequences provide.

Essentially, a temporal model in AI is a neural network or statistical model designed to handle sequences of data in which the order and timing of the individual pieces matter. Unlike simpler models that treat each data point in isolation, temporal models recognize the connections within a series. That series could be the words of a sentence, the movements of a stock price, or the frames of a video. It’s all about understanding the “when” and the “what preceded it.”
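To make “what preceded it” concrete, here is a minimal sketch of one of the simplest statistical temporal models, a first-order autoregressive model, AR(1), in which each value is predicted from the one just before it: x[t] = c + phi * x[t-1]. This is an illustrative choice rather than a model the article singles out; `fit_ar1` and `forecast_next` are hypothetical helper names, and the fit assumes plain least squares on a synthetic, noise-free series.

```python
# Minimal AR(1) sketch: x[t] = c + phi * x[t-1].
# Assumption: ordinary least squares on (previous value, current value) pairs.

def fit_ar1(series):
    """Fit x[t] = c + phi * x[t-1] by least squares and return (c, phi)."""
    prev = series[:-1]   # x[t-1]
    curr = series[1:]    # x[t]
    n = len(prev)
    mean_prev = sum(prev) / n
    mean_curr = sum(curr) / n
    cov = sum((p - mean_prev) * (q - mean_curr) for p, q in zip(prev, curr))
    var = sum((p - mean_prev) ** 2 for p in prev)
    phi = cov / var
    c = mean_curr - phi * mean_prev
    return c, phi

def forecast_next(series, c, phi):
    """One-step-ahead forecast: only the most recent observation is used."""
    return c + phi * series[-1]

# Synthetic series generated exactly by x[t] = 1 + 0.5 * x[t-1], starting at 10.
series = [10.0]
for _ in range(9):
    series.append(1.0 + 0.5 * series[-1])

c, phi = fit_ar1(series)
print(round(c, 6), round(phi, 6))  # 1.0 0.5
print(forecast_next(series, c, phi))
```

Because the data were generated without noise, the fit recovers the generating coefficients; on real data the estimates would only approximate them.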

Imagine trying to understand a joke if the punchline were delivered first. It simply wouldn’t make sense, would it? Temporal models help AI avoid the equivalent mistake by learning the patterns and relationships that develop over time. They let AI make sense of dynamic systems, forecast future values from past trends, and generate sequences that are coherent and contextually appropriate. It’s like giving AI a sense of history and anticipation, which, let’s be honest, makes it significantly more useful (and less prone to revealing the punchline prematurely).

So, whether it’s predicting the next word you’re about to type, analyzing subtle changes in your heart rate, or forecasting the weather, temporal models are operating behind the scenes, tracking how information rises and falls over time. They are the often-unseen heroes of many advanced AI applications, quietly adding the essential element of time to the intelligence equation.

The Inner Workings: How Temporal Models Learn from Time

Delving into the Mechanisms of Sequential Data Processing

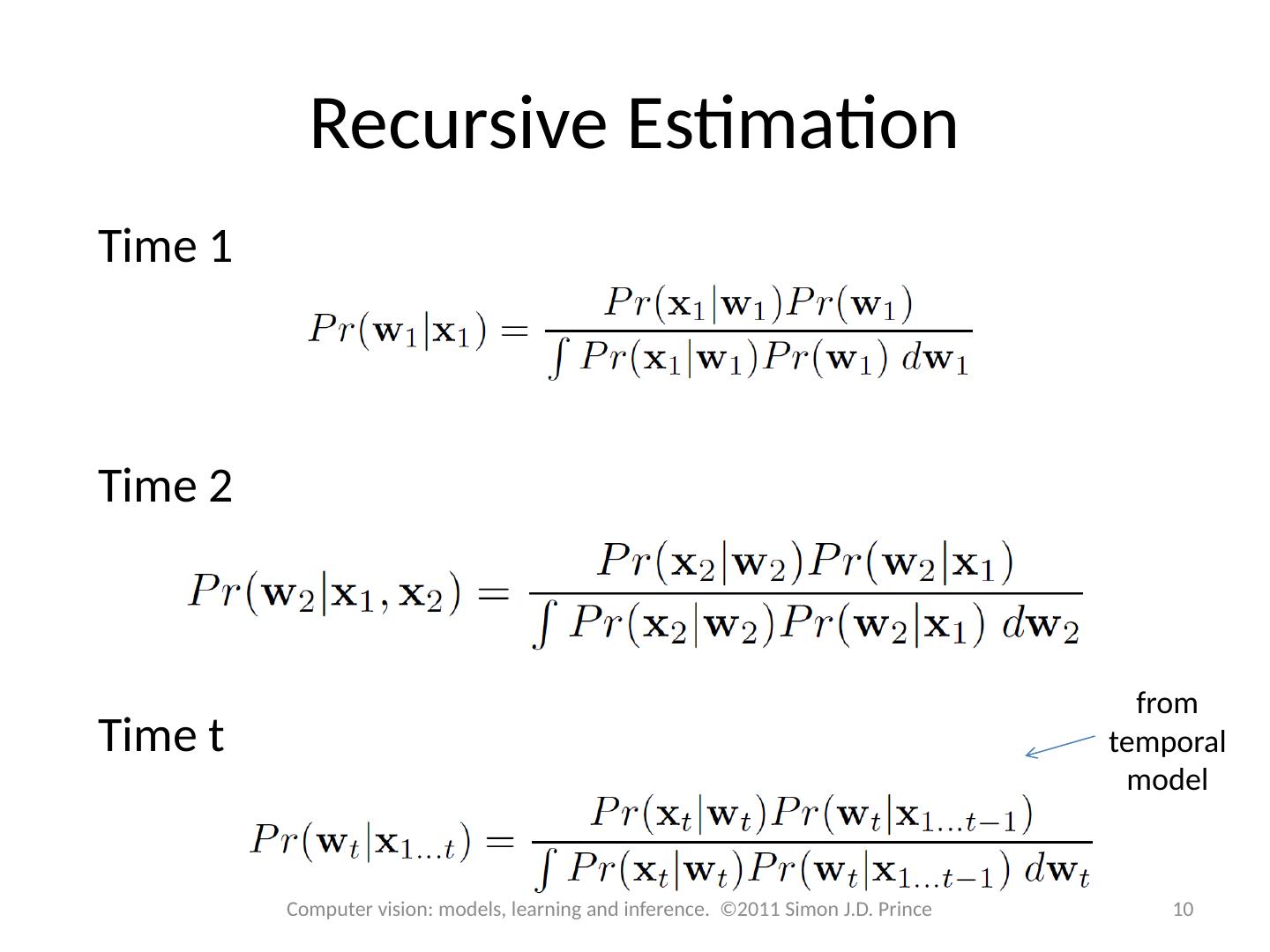

Now that we have a clearer picture of what temporal models are for, let’s look at how they actually learn from data that unfolds over time. One of the foundational and most widely used temporal models is the Recurrent Neural Network (RNN). Whereas feedforward networks pass information in a single direction, from input to output, RNNs include feedback loops: at each time step, the network combines the current input with a hidden state carried over from the previous step. Think of this hidden state as a short-term memory that lets the network recall what it has processed earlier.
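The recurrence described above can be sketched with a single hidden unit. This is a minimal illustration, assuming an Elman-style update h[t] = tanh(w_x * x[t] + w_h * h[t-1] + b) with scalar weights chosen by hand (real RNNs use vectors, weight matrices, and learned parameters); `rnn_forward` is a hypothetical name. Feeding the same values in two different orders shows that the final state depends on order, which a simple order-blind sum cannot capture.

```python
import math

def rnn_forward(inputs, w_x, w_h, b, h0=0.0):
    """Run a one-unit RNN over a sequence and return the hidden state after each step.

    h[t] = tanh(w_x * x[t] + w_h * h[t-1] + b); h carries earlier inputs forward.
    """
    h = h0
    states = []
    for x in inputs:
        h = math.tanh(w_x * x + w_h * h + b)
        states.append(h)
    return states

# Same values, different order: the final hidden state differs.
early = rnn_forward([1.0, 0.0, 0.0], w_x=1.0, w_h=0.5, b=0.0)  # signal came first, then faded
late = rnn_forward([0.0, 0.0, 1.0], w_x=1.0, w_h=0.5, b=0.0)   # signal just arrived
print(early[-1], late[-1])

# An order-blind summary (a plain sum) cannot tell the two sequences apart.
print(sum([1.0, 0.0, 0.0]) == sum([0.0, 0.0, 1.0]))
```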

This “memory” is vital for understanding sequences. When processing a sentence, for example, an RNN can use the words it has already seen to better interpret the current word. Basic RNNs, however, struggle with very long sequences because of the vanishing gradient problem: during training, the error signal is multiplied by a factor at every time step as it is propagated backward, so when those factors are smaller than one it shrinks exponentially, and the network has trouble learning connections between distant steps. This is where more advanced architectures such as Long Short-Term Memory (LSTM) networks and Gated Recurrent Units (GRUs) come into play. These models use mechanisms called “gates” that selectively control the flow of information, allowing them to retain relevant details over long stretches and discard less important ones. It’s akin to having a far more efficient and discerning memory.
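The gating idea can be sketched with a one-unit GRU. This follows one common formulation (update gate z, reset gate r, candidate state n, new state z * h + (1 - z) * n); other texts swap the roles of z and 1 - z, and real GRUs use vectors and learned weights. The parameter dictionary, its hand-picked values, and the helper names `gru_step` and `run` are hypothetical. The example holds the input at zero and changes only the update-gate bias: a gate near 1 keeps the initial state alive over 50 steps, while a gate near 0 overwrites it almost immediately.

```python
import math

def sigmoid(v):
    """Logistic function, squashing any real number into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-v))

def gru_step(x, h, p):
    """One step of a scalar GRU; p is a dict of (hypothetical) weights and biases."""
    z = sigmoid(p["w_z"] * x + p["u_z"] * h + p["b_z"])          # update gate: how much old state to keep
    r = sigmoid(p["w_r"] * x + p["u_r"] * h + p["b_r"])          # reset gate: how much old state feeds the candidate
    n = math.tanh(p["w_n"] * x + p["u_n"] * (r * h) + p["b_n"])  # candidate new state
    return z * h + (1.0 - z) * n                                 # z near 1 keeps the past, z near 0 replaces it

def run(inputs, p, h0):
    """Apply gru_step across a sequence and return the final hidden state."""
    h = h0
    for x in inputs:
        h = gru_step(x, h, p)
    return h

base = dict(w_z=0.0, u_z=0.0, w_r=0.0, u_r=0.0, b_r=0.0, w_n=1.0, u_n=0.0, b_n=0.0)
keep = run([0.0] * 50, {**base, "b_z": 5.0}, h0=1.0)     # update gate ~0.993: memory persists
forget = run([0.0] * 50, {**base, "b_z": -5.0}, h0=1.0)  # update gate ~0.007: memory is overwritten
print(keep, forget)
```

In a trained network the gate values are not fixed biases; they are computed from the current input and state, which is what lets the model decide, step by step, what to remember.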

Beyond RNNs and their variants, other approaches to temporal modeling exist. Transformer networks, originally introduced for machine translation, have also proven highly effective on sequential data more broadly. They rely on a mechanism called “attention,” which lets the model weigh how relevant each part of the input sequence is when making a prediction. Because every position can attend directly to every other position, attention is particularly useful for capturing long-range dependencies without passing information step by step through a recurrence. Attention on its own does not know the order of its inputs, so Transformers add positional information (positional encodings) to each element of the sequence.
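The attention mechanism described above can be sketched as scaled dot-product attention: each query is compared with every key, the scores are divided by the square root of the vector size, turned into weights with a softmax, and used to average the values. This is a bare-bones illustration in pure Python; it omits the learned projections, multiple heads, and positional encodings of a full Transformer, and the toy vectors are made up.

```python
import math

def softmax(scores):
    """Convert scores into positive weights that sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def attention(queries, keys, values):
    """Scaled dot-product attention; returns (outputs, attention weights per query)."""
    d = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        scores = [dot(q, k) / math.sqrt(d) for k in keys]
        weights = softmax(scores)  # how strongly this position attends to every position
        out = [sum(wt * val[j] for wt, val in zip(weights, values)) for j in range(len(values[0]))]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights

# Toy self-attention over 3 positions with 2-dimensional vectors (Q = K = V = x).
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
out, w = attention(x, x, x)
for row in w:
    print([round(v, 3) for v in row])
```

Every position reaches every other position in a single step, which is why distance in the sequence does not weaken the connection the way repeated recurrent updates can.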

Ultimately, the choice of temporal model depends on the task and the characteristics of the data. Whether it’s the recurrent connections of an RNN, the memory cells of an LSTM, or the attention mechanism of a Transformer, the underlying objective is the same: to enable AI to understand, predict, and generate sequential data in a way that respects the fundamental dimension of time. It’s a complex interplay of algorithms and data, all working together to give AI a more complete understanding of our constantly changing world.

Applications in the Real World: Where Temporal Models Shine

Witnessing the Impact of Time-Aware AI Across Industries

So, where do these AI models that understand time actually make a tangible difference in our daily lives? The applications are surprisingly broad and continue to expand as the field progresses. Natural Language Processing (NLP) stands out as a key area. Temporal models are fundamental to tasks like machine translation, where the order of words is crucial for accurate meaning; text summarization, which requires understanding the flow of information within a document; and sentiment analysis, where the context of words within a sentence or paragraph is essential. Even the virtual assistants we interact with rely heavily on temporal models to understand our requests and generate coherent responses. It’s all about deciphering the timeline of language.

Beyond language, temporal models are bringing significant changes to fields like finance, where they are used to forecast stock prices (a notoriously difficult task), detect fraud by spotting unusual sequences of transactions, and manage financial risk. Analyzing historical financial data for temporal patterns supports more informed decisions and can help limit losses. Similarly, in healthcare, temporal models can analyze a patient’s records over time to predict how a disease might progress and help personalize treatment, and when applied to population-level data they can even help anticipate outbreaks. Understanding the temporal dynamics of health data can lead to more proactive and effective care.
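The fraud-detection idea, judging a transaction against what came before it, can be illustrated with a deliberately simple rolling z-score check. This is a classical statistical baseline, not one of the neural models discussed earlier and not a production fraud system; `flag_unusual`, the window size, the threshold, and the transaction amounts are all hypothetical.

```python
import statistics

def flag_unusual(amounts, window=5, threshold=3.0):
    """Return the indices of amounts that sit far outside their recent history.

    Each amount at position t >= window is compared with the mean and population
    standard deviation of the previous `window` amounts (a rolling z-score).
    """
    flags = []
    for t in range(window, len(amounts)):
        history = amounts[t - window:t]
        mu = statistics.mean(history)
        sigma = statistics.pstdev(history)
        if sigma == 0:
            flagged = amounts[t] != mu  # flat history: any change is unusual
        else:
            flagged = abs(amounts[t] - mu) / sigma > threshold
        if flagged:
            flags.append(t)
    return flags

amounts = [20.0, 22.0, 19.0, 21.0, 20.0, 23.0, 18.0, 950.0, 21.0, 20.0]
print(flag_unusual(amounts))  # [7]
```

Note that the outlier then inflates the statistics of the following windows; learned temporal models aim to capture much richer patterns, such as the order and timing of several transactions together.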

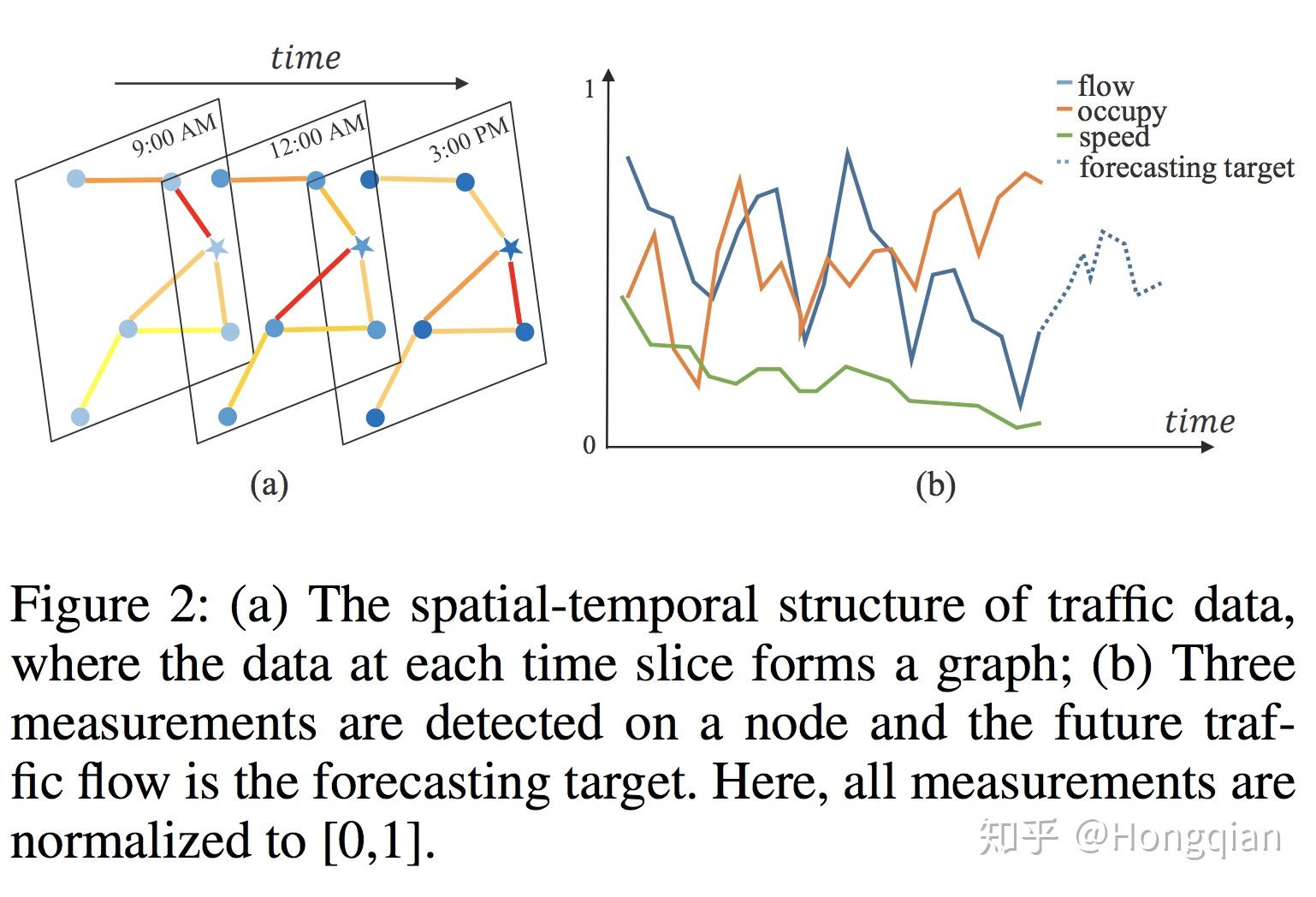

Consider video analysis. Temporal models enable AI to interpret actions and events as they unfold across a sequence of frames. This has implications for surveillance (detecting unusual behavior), autonomous vehicles (predicting the movements of other vehicles and pedestrians), and sports analysis (tracking player movements and the flow of the game). The ability to look beyond individual frames and understand the temporal relationships between them is changing how we analyze visual information.

From suggesting your next favorite show based on your viewing history (another triumph for temporal patterns!) to optimizing industrial processes by predicting when equipment might fail based on sensor data collected over time, temporal models are quietly powering a vast array of technologies. They are the key to extracting valuable insights and making predictions in any area where data has a time-based component, which, when you think about it, is almost everything. The future is sequential, and temporal models are at the forefront of this understanding.

The Challenges and the Future: Navigating the Temporal Landscape

Addressing Current Limitations and Exploring Emerging Trends

Despite their remarkable capabilities, temporal models still face challenges. Training them, particularly the more intricate architectures like LSTMs and Transformers, can demand significant computational resources and large datasets; in a standard Transformer, the cost of attention also grows quadratically with sequence length. When a task requires labeled sequential data, those labels can be difficult and time-consuming to obtain. Handling long-range dependencies, where events far apart in a sequence influence each other, remains a hard problem, although attention mechanisms have brought considerable progress.
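Why long-range dependencies are hard for plain RNNs can be seen with a little arithmetic. For a scalar RNN h[t] = tanh(w_h * h[t-1] + x), the chain rule gives the gradient of h[T] with respect to h[0] as a product of T factors w_h * (1 - h[t]^2), since the derivative of tanh(a) is 1 - tanh(a)^2. The sketch below assumes a hypothetical weight of 0.9 and a constant input of 0.5 and shows that product collapsing toward zero as the sequence grows; when the factors exceed 1 instead, the same product explodes.

```python
import math

def gradient_through_time(w_h, steps, x=0.5):
    """Return d h[steps] / d h[0] for the scalar RNN h[t] = tanh(w_h * h[t-1] + x).

    Each step contributes a factor w_h * (1 - h[t]**2) by the chain rule.
    """
    h = 0.0
    grad = 1.0
    for _ in range(steps):
        h = math.tanh(w_h * h + x)
        grad *= w_h * (1.0 - h * h)
    return grad

for steps in (1, 10, 50):
    print(steps, gradient_through_time(0.9, steps))
```

With every factor below 0.9, the gradient after 50 steps is smaller than 0.9 ** 50, so an error at the end of the sequence barely affects what the network learns about its beginning.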

Another area of ongoing development is the interpretability of temporal models. While they can often produce accurate predictions, understanding the specific reasons behind a particular prediction based on the temporal patterns they have identified can be challenging. This lack of transparency can be a concern in critical applications where trust and explainability are essential. Researchers are actively working on developing methods to better understand the internal decision-making processes of these complex models.

Looking ahead, the field of temporal modeling in AI is full of exciting possibilities. We can anticipate the emergence of even more advanced architectures, potentially combining the strengths of different existing approaches. There is also a growing emphasis on developing more efficient and robust techniques for training these models with smaller amounts of data. Furthermore, the integration of temporal models with other AI techniques, such as reinforcement learning and causal inference, holds significant promise for tackling complex real-world problems that involve both sequential dependencies and decision-making over time.

The ability of AI to comprehend and reason about time is becoming ever more vital in an increasingly dynamic and interconnected world. As we generate more data that unfolds over time, the need for powerful and efficient temporal models will only grow. The journey toward fully understanding the temporal enigma is ongoing, and the innovations on the horizon could unlock even deeper insights into the flow of time and its impact on the world around us.

Frequently Asked Questions (FAQ)

Your Burning Questions About Temporal Models Answered

Q: So, if a regular AI looks at a photo, does a temporal AI look at a video?

A: That’s a very insightful way to think about it! While a standard image recognition AI analyzes a static image, a temporal model can analyze a sequence of images (a video) and understand how things change from one moment to the next. It’s like the difference between looking at a single still frame and following the entire scene as it plays out.

Q: Are temporal models only used for really complicated things? Like predicting the stock market?

A: Not necessarily! While they are certainly used for intricate tasks like financial forecasting, temporal models are also at work in more everyday applications. Think about your phone suggesting the next word as you type: that’s a temporal model in action, predicting from the sequence of words you’ve already entered. Even a music streaming service recommending songs based on your listening history makes use of temporal patterns.
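The simplest version of next-word suggestion can be sketched as a bigram model: count which words follow each word in some text, then suggest the most frequent followers. This is a toy illustration under a strong assumption (only the single previous word matters); actual phone keyboards use far more sophisticated models, and nothing here describes any specific product. `train_bigram`, `suggest_next`, and the tiny corpus are hypothetical.

```python
from collections import Counter, defaultdict

def train_bigram(text):
    """Count, for each word, which words follow it in the training text."""
    words = text.lower().split()
    follows = defaultdict(Counter)
    for prev, nxt in zip(words, words[1:]):
        follows[prev][nxt] += 1
    return follows

def suggest_next(follows, word, k=3):
    """Return up to k of the most frequent words seen after `word`."""
    counts = follows.get(word.lower(), Counter())  # unknown word -> no suggestions
    return [w for w, _ in counts.most_common(k)]

corpus = "see you soon . see you later . see you soon . talk to you later"
model = train_bigram(corpus)
print(suggest_next(model, "you"))  # ['soon', 'later']
print(suggest_next(model, "see"))  # ['you']
```

Ties keep the order in which the words were first seen, because `Counter.most_common` orders equal counts by first occurrence.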

Q: If these models are so good at understanding sequences, can they predict exactly when my coffee will be perfectly brewed?

A: While that would be incredibly convenient, predicting the precise moment of coffee perfection is still a bit beyond the current scope of most temporal models (there are just too many unpredictable factors!). However, in more controlled systems, like predicting temperature fluctuations in a machine over time, temporal models can achieve impressive accuracy. So, while your coffee maker might remain a bit of a mystery for now, the future possibilities for temporal applications are extensive!
