Are LLMs Deterministic or Stochastic?

Decoding the Deterministic Dance of Language Models: Are LLMs Predictable or Prone to Randomness?

Unraveling the Core Nature of Large Language Models

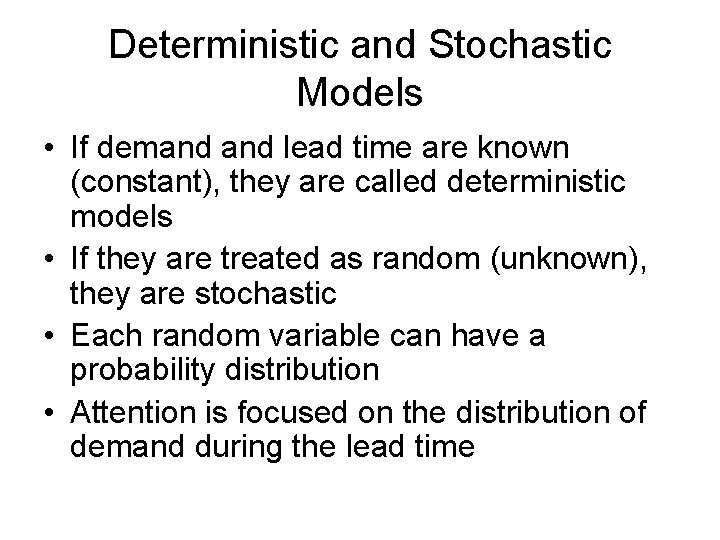

The question of whether Large Language Models (LLMs) operate with absolute predictability or possess an inherent element of chance is central to grasping their abilities and limitations. It's akin to pondering if a meticulously followed recipe will invariably produce the identical baked good, or if a touch of unforeseen magic influences each outcome. Essentially, a system operating deterministically, when presented with the same input, will always yield the same result. Consider a basic calculator: the sum of two and two will consistently be four. Conversely, a stochastic system incorporates elements of randomness, implying that the output can differ even when the inputs are identical. So, where do our sophisticated language models fit within this spectrum?

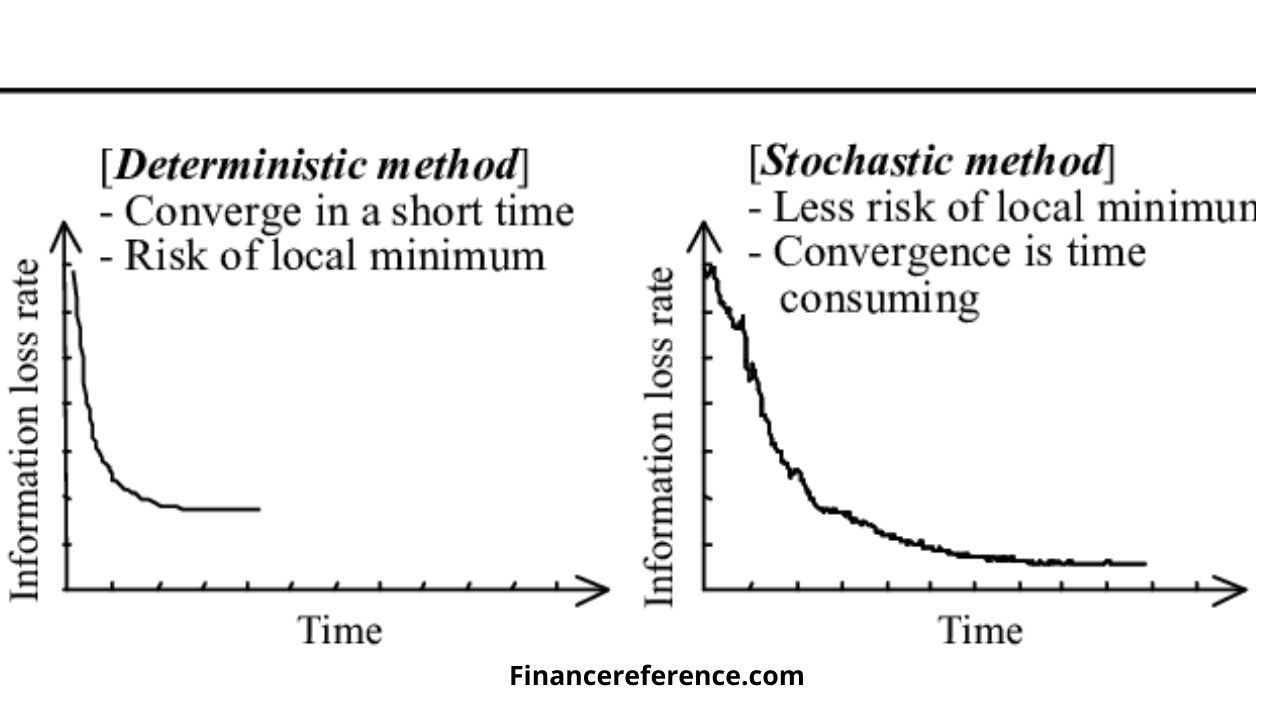

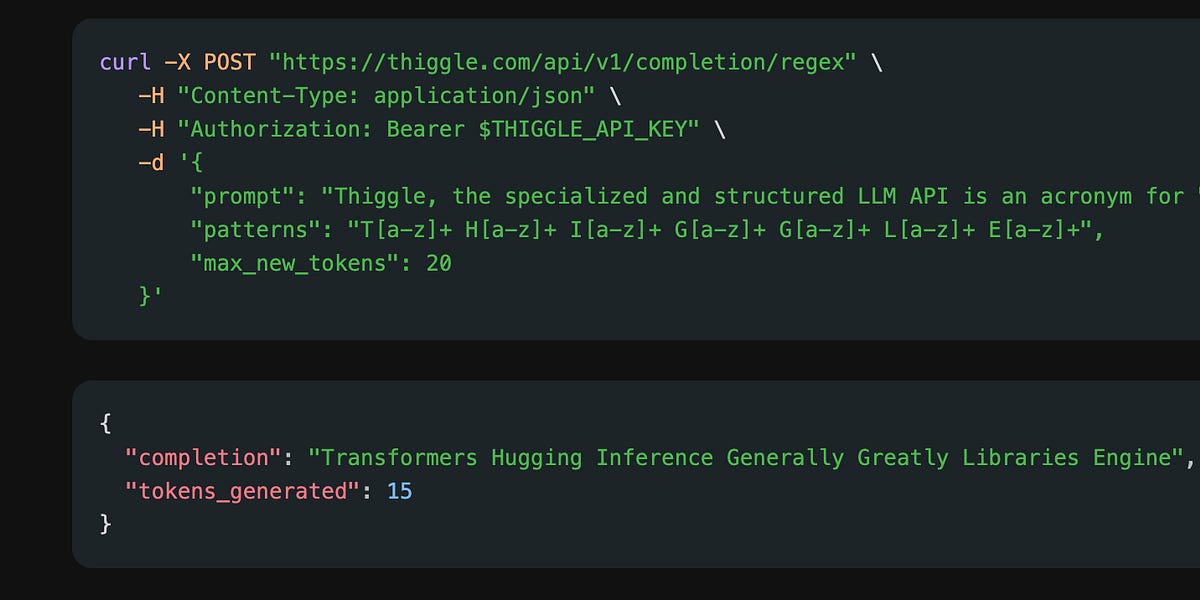

At their core, LLMs work by predicting the next token (a word or word fragment) in a sequence, based on the preceding text and the patterns learned from their extensive training data. For a given input, the model's computation assigns a probability to every possible next token, and that computation is essentially deterministic. The element of chance enters at the following step, when one token is chosen from this distribution: the model can always take the most probable option, or it can sample from the distribution. A significant parameter, temperature, controls how strongly that sampling favors the most probable options, and therefore how much stochasticity shows up in the output.
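The two stages can be sketched in a few lines of Python. This is a toy illustration, not any real model's API: the four candidate words and their raw scores (logits) are made up. Turning scores into probabilities is deterministic; only the final selection step can involve chance.

```python
import math
import random

def softmax(logits):
    """Turn raw scores (logits) into a probability distribution."""
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical candidates for the word after "The cat sat on the"
candidates = ["mat", "sofa", "roof", "moon"]
logits = [3.0, 2.0, 1.0, -1.0]  # made-up scores from a hypothetical model

# Stage 1 (deterministic): the same logits always give the same probabilities.
probs = softmax(logits)

# Stage 2a (deterministic): greedy selection always picks the top word.
greedy_word = candidates[probs.index(max(probs))]

# Stage 2b (stochastic): sampling draws a word in proportion to its probability.
sampled_word = random.choices(candidates, weights=probs, k=1)[0]

print(probs)
print(greedy_word, sampled_word)
```

Running the script repeatedly prints the same probabilities and the same greedy word every time, while the sampled word can change from run to run.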

Imagine the LLM contemplating several potential next words, each associated with a specific probability score. A low temperature setting encourages the model to select the word with the highest probability, leading to more consistent and predictable outputs. This is often preferred when factual information or a straightforward answer is required. Increase the temperature, however, and the model becomes more exploratory, more inclined to choose less probable words. This introduces an element of randomness, resulting in more imaginative, and occasionally, more unexpected outputs. It's comparable to giving the AI a gentle nudge to think beyond conventional boundaries, or perhaps, to engage in a bit of linguistic experimentation.
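A common way to apply temperature is to divide each score by T before the softmax. The sketch below assumes that formulation and reuses made-up scores for four hypothetical words, printing how the distribution sharpens or flattens as T changes.

```python
import math

def softmax_with_temperature(logits, temperature):
    """Divide logits by the temperature, then normalize with softmax."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

candidates = ["mat", "sofa", "roof", "moon"]  # hypothetical next words
logits = [3.0, 2.0, 1.0, -1.0]               # made-up scores

for t in (0.2, 1.0, 2.0):
    probs = softmax_with_temperature(logits, t)
    summary = ", ".join(f"{w}={p:.2f}" for w, p in zip(candidates, probs))
    print(f"T={t}: {summary}")
```

With these made-up scores, "mat" receives more than 99% of the probability at T=0.2 but less than half at T=2.0, where the less likely words get a real chance of being picked.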

Therefore, the situation is not a simple binary choice. LLMs possess a deterministic core: for a given input, the probabilities they assign to each possible next word are fixed by their trained parameters. Parameters such as temperature then govern how much randomness enters the final choice of word. This combination is what renders them so versatile, capable of generating both factual reports and creative narratives.

The Temperature Tango: How Randomness Influences LLM Output

Exploring the Spectrum from Predictable Precision to Creative Chaos

The "temperature" setting within an LLM functions like a control dial, regulating the level of randomness in its generated text. At one end of the spectrum, a low temperature (approaching zero) renders the model highly deterministic. It will consistently opt for the most probable next word based on its training, resulting in predictable and often quite conventional responses. Think of it as the model adhering strictly to a script, ensuring accuracy and coherence in tasks such as summarization or code generation, where precision is paramount.
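Because dividing by a temperature of exactly zero is undefined, a selection function has to handle that case separately. This sketch treats T = 0 as greedy (argmax) selection, which matches the limit the softmax approaches as T shrinks; the function name `select_next` and the scores are hypothetical.

```python
import math
import random

def select_next(candidates, logits, temperature, rng):
    """Pick the next word; temperature 0 is treated as greedy (argmax) selection.

    Treating 0 as greedy is an assumption of this sketch; as the temperature
    approaches 0, temperature-scaled softmax concentrates on the argmax anyway.
    """
    if temperature == 0:
        best = max(range(len(logits)), key=lambda i: logits[i])
        return candidates[best]
    scaled = [x / temperature for x in logits]
    m = max(scaled)
    weights = [math.exp(x - m) for x in scaled]  # unnormalized is fine for choices()
    return rng.choices(candidates, weights=weights, k=1)[0]

candidates = ["mat", "sofa", "roof", "moon"]  # hypothetical next words
logits = [3.0, 2.0, 1.0, -1.0]               # made-up scores
rng = random.Random()

greedy_picks = {select_next(candidates, logits, 0, rng) for _ in range(100)}
print(greedy_picks)  # {'mat'}
```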

As the temperature is gradually increased, the model begins to consider less probable words. This introduces an element of chance, leading to more varied and potentially innovative outputs. The model becomes less certain about the single "best" answer and starts investigating alternatives. This can be particularly useful for tasks like brainstorming sessions, creative writing endeavors, or generating diverse perspectives on a given subject. It's akin to allowing the model to relax its formal constraints and engage in a more free-flowing style of thought.
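The growing variety can be measured by sampling the same hypothetical next-word choice many times at a low and a high temperature and counting the outcomes. The sketch uses a seeded random generator (the seed value 42 is arbitrary), which also shows that "random" draws become exactly repeatable once the seed is fixed.

```python
import math
import random
from collections import Counter

def sample_words(candidates, logits, temperature, n, seed):
    """Draw n next-word samples at a given temperature with a seeded RNG."""
    rng = random.Random(seed)  # fixed seed: the draws are reproducible
    scaled = [x / temperature for x in logits]
    m = max(scaled)
    weights = [math.exp(x - m) for x in scaled]
    return [rng.choices(candidates, weights=weights, k=1)[0] for _ in range(n)]

candidates = ["mat", "sofa", "roof", "moon"]  # hypothetical next words
logits = [3.0, 2.0, 1.0, -1.0]               # made-up scores

low = sample_words(candidates, logits, temperature=0.1, n=200, seed=42)
high = sample_words(candidates, logits, temperature=2.0, n=200, seed=42)

print("T=0.1:", Counter(low))
print("T=2.0:", Counter(high))
```

At T=0.1 nearly every draw is "mat", while at T=2.0 all four words appear. Calling the function again with the same seed reproduces the same list.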

However, this heightened stochasticity comes with a trade-off. While creativity may flourish, so too does the potential for the model to generate nonsensical or factually incorrect information. The further the temperature is raised, the greater the likelihood that the model will deviate from the path of logical coherence. It's comparable to giving a brilliant but slightly inebriated orator the floor — the results can be intriguing, but not always entirely dependable.
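The trade-off can be quantified with two numbers: the Shannon entropy of the next-word distribution (how unpredictable the choice is) and the probability of picking the least likely option. This sketch assumes the same temperature-scaled softmax and made-up scores as a toy stand-in for a real model.

```python
import math

def softmax_with_temperature(logits, temperature):
    """Temperature-scaled softmax over a list of scores."""
    scaled = [x / temperature for x in logits]
    m = max(scaled)
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def entropy_bits(probs):
    """Shannon entropy in bits; the maximum for four options is 2 bits."""
    return sum(-p * math.log2(p) for p in probs if p > 0)

candidates = ["mat", "sofa", "roof", "moon"]  # hypothetical; "moon" is the least likely
logits = [3.0, 2.0, 1.0, -1.0]               # made-up scores

temperatures = [0.5, 1.0, 2.0, 5.0]
entropies = []
moon_probs = []
for t in temperatures:
    probs = softmax_with_temperature(logits, t)
    entropies.append(entropy_bits(probs))
    moon_probs.append(probs[candidates.index("moon")])
    print(f"T={t}: entropy={entropies[-1]:.2f} bits, P(moon)={moon_probs[-1]:.3f}")
```

Both numbers rise with every increase in temperature: the output becomes less predictable, and the odd choice becomes more likely.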

Understanding the role of temperature is vital for effectively utilizing LLMs. Knowing when to maintain a low temperature for accuracy and when to elevate it for creativity enables users to harness the full potential of these powerful tools. It's a delicate balancing act, a continuous negotiation between predictability and the delightful unpredictability that can spark innovation.

Deterministic Underpinnings: The Role of Training Data and Algorithms

Examining the Foundations of LLM Predictability

Despite the influence of stochastic elements like temperature, the core computation of most LLMs is deterministic. The extensive datasets they are trained upon provide a statistical comprehension of language, enabling them to predict the likelihood of word sequences. The algorithms that power these models are engineered to learn these statistical relationships and apply them consistently. Given identical input and the same model parameters, the core prediction mechanism produces the same probability distribution every time, apart from tiny floating-point differences that can arise when calculations are split across parallel hardware.

The training process itself aims to instill a robust sense of statistical probability. The model learns to identify patterns and associations between words, phrases, and concepts. Training does involve some randomness, such as random initial parameter values, shuffled batches of training data, and techniques like dropout, but each update adjusts the model's parameters in a mathematically defined way. Once trained, these parameters remain fixed (unless the model undergoes further fine-tuning), and this fixed set of parameters is what makes the core prediction engine deterministic.
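Where training uses randomness, such as random initial weights and a shuffled example order, fixing the random seed makes a run repeatable. The toy sketch below assumes a one-parameter model y = w * x fit by stochastic gradient descent on a hypothetical five-point dataset; real LLM training is vastly larger, but the role of the seed is the same in spirit.

```python
import random

def train(seed, epochs=50, lr=0.01):
    """Fit y = w * x with stochastic gradient descent on a tiny toy dataset.

    Randomness enters through the initial weight and the shuffle order;
    fixing the seed makes the whole run repeatable.
    """
    rng = random.Random(seed)
    # Hypothetical noisy data scattered around a true slope of 2
    data = [(1, 2.1), (2, 3.9), (3, 6.2), (4, 7.8), (5, 10.1)]
    w = rng.uniform(-1.0, 1.0)         # random initialization
    for _ in range(epochs):
        rng.shuffle(data)              # random example order each epoch
        for x, y in data:
            grad = 2 * (w * x - y) * x  # derivative of the squared error (w*x - y)**2
            w -= lr * grad
    return w

w_a = train(seed=1)
w_b = train(seed=1)  # same seed: bit-for-bit the same trained weight
w_c = train(seed=2)  # different seed: typically a slightly different weight
print(w_a, w_b, w_c)
```

Once `train` returns, the weight no longer changes, so every later prediction made with it is deterministic.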

Consider the training data as an immense library, and the learning algorithm as a meticulous librarian who organizes the books based on the frequency with which certain words appear together. When you pose a question to the model, it's like the librarian swiftly scanning the shelves to locate the most probable subsequent word based on the patterns they have observed. This process, at its core, is deterministic — the librarian will always adhere to the same organizational principles.
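The librarian analogy maps neatly onto a bigram model, which simply counts how often each word follows another. This is a deliberately simplified stand-in: real LLMs learn neural-network weights rather than literal count tables, and the corpus and helper names here are hypothetical.

```python
from collections import Counter, defaultdict

def build_bigram_counts(text):
    """Count how often each word follows each other word (the librarian's index)."""
    words = text.lower().split()
    counts = defaultdict(Counter)
    for prev, nxt in zip(words, words[1:]):
        counts[prev][nxt] += 1
    return counts

def most_probable_next(counts, word):
    """Return the most frequent follower of `word`, or None if it was never seen."""
    followers = counts.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]

corpus = "the cat sat on the mat the cat ate the fish the dog sat on the rug"
counts = build_bigram_counts(corpus)
print(most_probable_next(counts, "the"))  # cat
print(most_probable_next(counts, "the"))  # cat again: same index, same answer
```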

Therefore, while the output might exhibit some variability due to stochastic sampling methods, the fundamental operations of an LLM are rooted in deterministic algorithms and the statistical patterns acquired from its training data. This deterministic foundation ensures a degree of consistency and reliability in their responses, particularly when the stochastic elements are carefully managed.

The Blurry Lines: When Determinism Meets Stochasticity in Practice

Navigating the Nuances of LLM Behavior in Real-World Applications

In practical applications, the distinction between deterministic and stochastic LLM behavior can become somewhat indistinct. While the underlying mechanisms may be largely deterministic, the sheer complexity of language and the enormous number of model parameters can lead to outputs that seem surprisingly novel and even unpredictable. It's akin to a complex machine with numerous interacting components, where its overall behavior, while governed by deterministic rules, can still produce seemingly emergent and stochastic outcomes.

Consider the act of generating creative writing. Even with a low temperature setting, an LLM might produce a unique turn of phrase or an unexpected plot development simply due to the intricate interplay of the statistical relationships it has learned. While the selection of each word might be based on the highest probability given the context, the sequence of these high-probability words can still result in something that feels fresh and original. It's like a jazz improvisation — each note might be chosen based on musical theory (a deterministic framework), but the resulting melody can be unique and spontaneous.
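Even a strictly greedy decoder can produce a sentence that never appears in its training text, because it chains together locally likely pairs of words. The sketch below assumes a hypothetical three-sentence corpus and a toy bigram model; the function name `greedy_generate` is illustrative.

```python
from collections import Counter, defaultdict

def build_bigram_counts(words):
    """Count how often each word follows each other word."""
    counts = defaultdict(Counter)
    for prev, nxt in zip(words, words[1:]):
        counts[prev][nxt] += 1
    return counts

def greedy_generate(counts, start, length):
    """Always append the most frequent follower: fully deterministic decoding."""
    output = [start]
    while len(output) < length:
        followers = counts.get(output[-1])
        if not followers:
            break  # no known continuation
        output.append(followers.most_common(1)[0][0])
    return output

corpus = "my dog chased a cat . she bought a red ball . he found a red ball ."
words = corpus.split()
counts = build_bigram_counts(words)

sentence = " ".join(greedy_generate(counts, "my", 6))
print(sentence)            # my dog chased a red ball
print(sentence in corpus)  # False: this exact sentence is not in the corpus
```

Every step picks the single most frequent follower, yet the result combines two source sentences into a new one.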

Furthermore, the way prompts are formulated can significantly influence the output and the apparent level of stochasticity. A vague or open-ended prompt is more likely to elicit a wider range of responses compared to a highly specific and constrained one. The ambiguity in the input provides the model with more latitude to explore different possibilities within its learned statistical landscape, leading to what might seem like more stochastic behavior from an external viewpoint.
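One way to see this is to measure how spread out the possible continuations are for a vague versus a specific prompt. The sketch below assumes a tiny hypothetical corpus and a simple counting model that looks up whatever word follows each occurrence of the prompt phrase; lower entropy means fewer plausible continuations.

```python
import math
from collections import Counter

def next_word_distribution(words, context):
    """Count which word follows each occurrence of the context phrase."""
    ctx = context.split()
    n = len(ctx)
    followers = Counter(
        words[i + n]
        for i in range(len(words) - n)
        if words[i:i + n] == ctx
    )
    total = sum(followers.values())
    return {w: c / total for w, c in followers.items()}

def entropy_bits(dist):
    """Shannon entropy in bits: 0 means only one possible continuation."""
    return sum(-p * math.log2(p) for p in dist.values() if p > 0)

corpus = ("the capital of france is paris . the river in paris is the seine . "
          "the city is old . the capital of italy is rome .")
words = corpus.split()

for prompt in ("the", "the capital of", "capital of france is"):
    dist = next_word_distribution(words, prompt)
    print(f"{prompt!r}: {dist} -> {entropy_bits(dist):.2f} bits")
```

The vague prompt "the" leaves four candidates open, while "capital of france is" leaves exactly one.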

Ultimately, while LLMs possess a deterministic core, the interaction of their complex architectures, extensive training data, and adjustable parameters such as temperature results in a spectrum of behavior ranging from highly predictable to surprisingly creative. Understanding this nuanced relationship between determinism and stochasticity is crucial for effectively leveraging these powerful tools for a wide array of applications.

Frequently Asked Questions (FAQ)

Your Inquiries About LLM Predictability Addressed

Q: So, are LLMs truly random then?

A: Not in the sense of pure, unguided randomness. While they can exhibit stochastic behavior, particularly at higher temperature settings, every random choice is drawn from probabilities shaped by the statistical patterns they learned from their training data. It's more akin to a controlled form of randomness, guided by probability.
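"Controlled randomness" has a concrete meaning: over many draws, each word is chosen about as often as its probability says. This sketch assumes made-up probabilities for four hypothetical words and a seeded generator so the run is reproducible.

```python
import random
from collections import Counter

candidates = ["mat", "sofa", "roof", "moon"]  # hypothetical next words
probs = [0.66, 0.24, 0.09, 0.01]              # made-up next-word probabilities

rng = random.Random(0)  # seeded so the run is reproducible
n = 100_000
draws = Counter(rng.choices(candidates, weights=probs, k=n))

for word, p in zip(candidates, probs):
    print(f"{word:>5}: target {p:.2f}, observed {draws[word] / n:.3f}")
```

Any single draw is unpredictable, but the observed frequencies land very close to the target probabilities.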

Q: Why might one want to make an LLM more stochastic?

A: Increasing the stochasticity (by raising the temperature) can be advantageous for tasks that demand creativity, brainstorming, or generating diverse options. It allows the model to explore less obvious possibilities and produce more novel and unexpected outputs. Think of it as encouraging its imaginative capabilities!

Q: Can a deterministic LLM ever produce something new?

A: Certainly! Even with a deterministic foundation, the vast amount of information LLMs are trained on enables them to combine existing knowledge in innovative ways. While the underlying word selection might be based on probabilities, the resulting combinations and sequences can still be surprisingly original. It's like a skilled artist using familiar pigments to create an entirely new masterpiece.
